Autonomous Reconnaissance Robot

Some of the content on this page is based on the following resource:

Report

Introduction

In disaster response scenarios, danger and operational challenges often make it impractical for first responders to carry out missions effectively, which makes autonomous robots valuable assets. This project integrated autonomous navigation and object detection on the open-source robot platform TurtleBot3. The objective was a reconnaissance mission in which the robot maps a closed, unknown environment while detecting victims, represented by AprilTags, and locating their poses.

Robot Hardware Setup

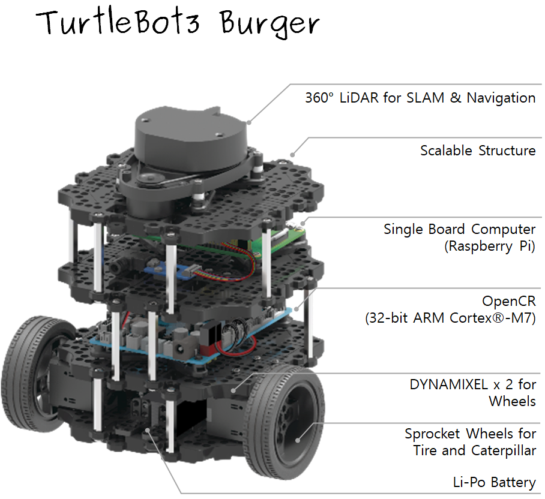

Fig. 1: TurtleBot3 components [1].

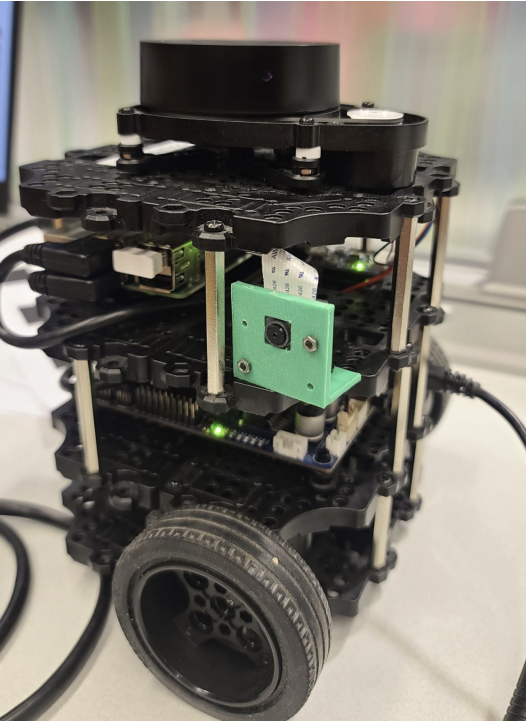

The robot comprised the components in Fig. 1, plus a Pi camera that was mounted on the robot and connected to the single-board computer (SBC) to detect AprilTags, as shown in Fig. 2 below.

Fig. 2: Pi camera mounted on TurtleBot3.

Robot Software Setup

Several setup steps were required to run the sensors on the robot and create an effective development environment. First, Ubuntu 20.04 and ROS Noetic were installed on a personal computer so that it could run the ROS Master remotely, together with the dependent ROS packages and the TurtleBot3 packages. Second, the Raspberry Pi ROS Noetic image was written to the SBC’s microSD card. Third, the packages required for the OpenCR firmware were installed on the SBC and the firmware was uploaded to the OpenCR. Lastly, intrinsic calibration of the Pi camera was performed using a checkerboard. More detailed setup tutorials can be found here.

Autonomous Navigation

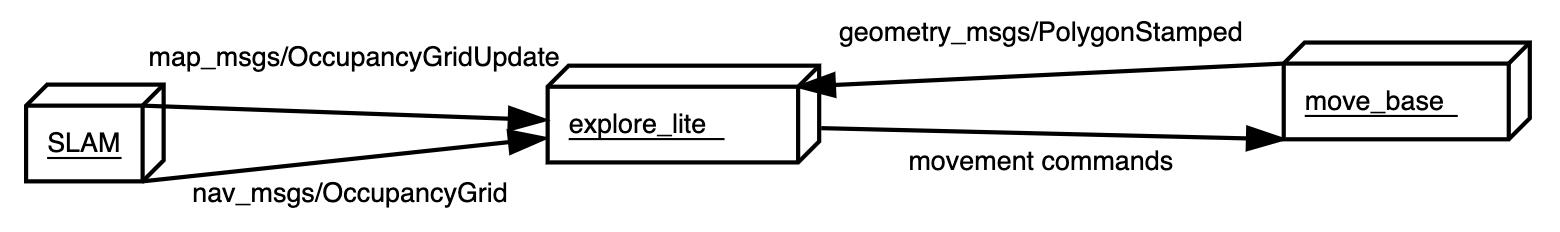

Fig. 3: ROS node architecture of explore_lite package [2].

The robot navigated a closed, unknown environment with the ROS packages in Fig. 3. Gmapping performed SLAM with the robot's LiDAR and generated an occupancy grid map of the environment. The explore_lite package implemented Frontier-Based Exploration on the occupancy grid map and sent navigation goals to the move_base node, which planned paths to those goals and output the velocity commands that drove the robot's wheels.
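The core idea of Frontier-Based Exploration can be sketched in a few lines. The example below is a simplified illustration, not the explore_lite implementation: it assumes the occupancy grid uses the nav_msgs/OccupancyGrid value convention (0 for free, 100 for occupied, -1 for unknown) and marks as a frontier every free cell that touches an unknown cell. The function name `find_frontier_cells` and the toy grid are hypothetical.

```python
from typing import List, Tuple

# Cell values following the nav_msgs/OccupancyGrid convention (assumed here).
FREE, OCCUPIED, UNKNOWN = 0, 100, -1


def find_frontier_cells(grid: List[List[int]]) -> List[Tuple[int, int]]:
    """Return (row, col) of every free cell with a 4-connected unknown neighbour."""
    rows, cols = len(grid), len(grid[0])
    frontiers = []
    for r in range(rows):
        for c in range(cols):
            if grid[r][c] != FREE:
                continue
            for dr, dc in ((-1, 0), (1, 0), (0, -1), (0, 1)):
                nr, nc = r + dr, c + dc
                inside = 0 <= nr < rows and 0 <= nc < cols
                if inside and grid[nr][nc] == UNKNOWN:
                    frontiers.append((r, c))
                    break  # one unknown neighbour is enough
    return frontiers


# Toy map: a walled room whose right-hand side has not been observed yet.
grid = [
    [100, 100, 100, 100],
    [100,   0,   0,  -1],
    [100,   0,   0,  -1],
    [100, 100, 100, 100],
]
frontier_cells = find_frontier_cells(grid)
print(frontier_cells)
```

In a full exploration loop, neighbouring frontier cells would typically be grouped, one group chosen as the next goal, and the process repeated until no frontiers remain.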

AprilTag Detection

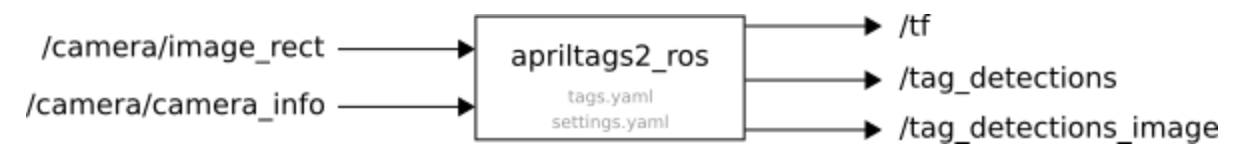

Fig. 4: ROS node architecture of apriltags2_ros package [3].

AprilTags were detected by the Pi camera with the ROS package in Fig. 4. The intrinsic camera parameters obtained during the software setup were used to calculate the poses of the tags with respect to the camera frame. Extrinsic camera calibration was not necessary since the size of the tags was known and configured in the ROS package.
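Why a known tag size makes the tag's distance recoverable can be illustrated with the pinhole camera model. The sketch below is a simplification, not what the detection package does internally: it assumes the tag faces the camera squarely, so that its distance is roughly the focal length in pixels times the physical tag width divided by the tag's width in the image. The function name and the numbers are hypothetical.

```python
def tag_range_from_width(fx_pixels: float, tag_size_m: float, width_pixels: float) -> float:
    """Approximate distance to a fronto-parallel tag using the pinhole model.

    fx_pixels    -- focal length from the intrinsic calibration, in pixels
    tag_size_m   -- physical edge length of the tag, in metres
    width_pixels -- apparent edge length of the tag in the image, in pixels
    """
    if width_pixels <= 0:
        raise ValueError("width_pixels must be positive")
    return fx_pixels * tag_size_m / width_pixels


# Illustrative values only: a 0.10 m tag that appears 50 px wide
# through a lens with a 500 px focal length.
estimated_range_m = tag_range_from_width(500.0, 0.10, 50.0)
print(estimated_range_m)
```

Without the physical tag size, only the ratio between size and distance would be observable, which is why the size has to be supplied to the detector.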

The poses of the tags had to be transformed from the camera frame to the map frame so that the tags could be displayed on the occupancy grid map. The transformation matrix between the camera frame and the robot frame was obtained by measuring the mounting position of the camera on the robot. The other transformation matrices were provided by the apriltags2_ros and Gmapping SLAM nodes.
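The chain of transformations described above can be sketched as a product of homogeneous matrices: map-to-robot, robot-to-camera, then camera-to-tag. The example below is a planar (2D) simplification with made-up numbers, assuming each frame's x axis points forward; on the real robot the transforms are 3D and the camera optical frame uses a different axis convention. The helper names `se2` and `compose` are hypothetical.

```python
import math


def se2(x: float, y: float, theta: float):
    """3x3 homogeneous transform for a planar pose (x, y, heading theta)."""
    c, s = math.cos(theta), math.sin(theta)
    return [[c, -s, x], [s, c, y], [0.0, 0.0, 1.0]]


def compose(a, b):
    """Matrix product a @ b for 3x3 nested lists."""
    return [[sum(a[i][k] * b[k][j] for k in range(3)) for j in range(3)]
            for i in range(3)]


# Illustrative values only.
T_map_base = se2(2.0, 1.0, math.pi / 2)     # robot pose in the map (from SLAM)
T_base_cam = se2(0.0, -0.05, -math.pi / 2)  # camera 5 cm to the right, facing right (measured)
T_cam_tag = se2(0.5, 0.0, 0.0)              # tag 0.5 m in front of the camera (from detection)

# Chain the transforms: map <- robot <- camera <- tag.
T_map_tag = compose(compose(T_map_base, T_base_cam), T_cam_tag)
tag_x, tag_y = T_map_tag[0][2], T_map_tag[1][2]
print(round(tag_x, 3), round(tag_y, 3))
```

In a ROS system this composition is normally delegated to the tf library rather than written by hand; the sketch only makes the order of multiplication explicit.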

Reconnaissance Mission Result

A closed environment with AprilTags attached to the walls was built as shown in Fig. 5.

Fig. 5: Testbed for robot's reconnaissance mission.

The robot conducted its reconnaissance mission in two phases. In the first phase, the robot explored the test environment with the navigation nodes and generated an occupancy grid map of the environment, as shown in Fig. 6.

Fig. 6: Occupancy grid map of testbed generated by robot.

Video 1: Running Frontier-Based Exploration on TurtleBot3.

Video 2: Frontier-Based Exploration displayed on Rviz.

Videos 1 and 2 show the first phase of the reconnaissance mission in the testbed and on Rviz.

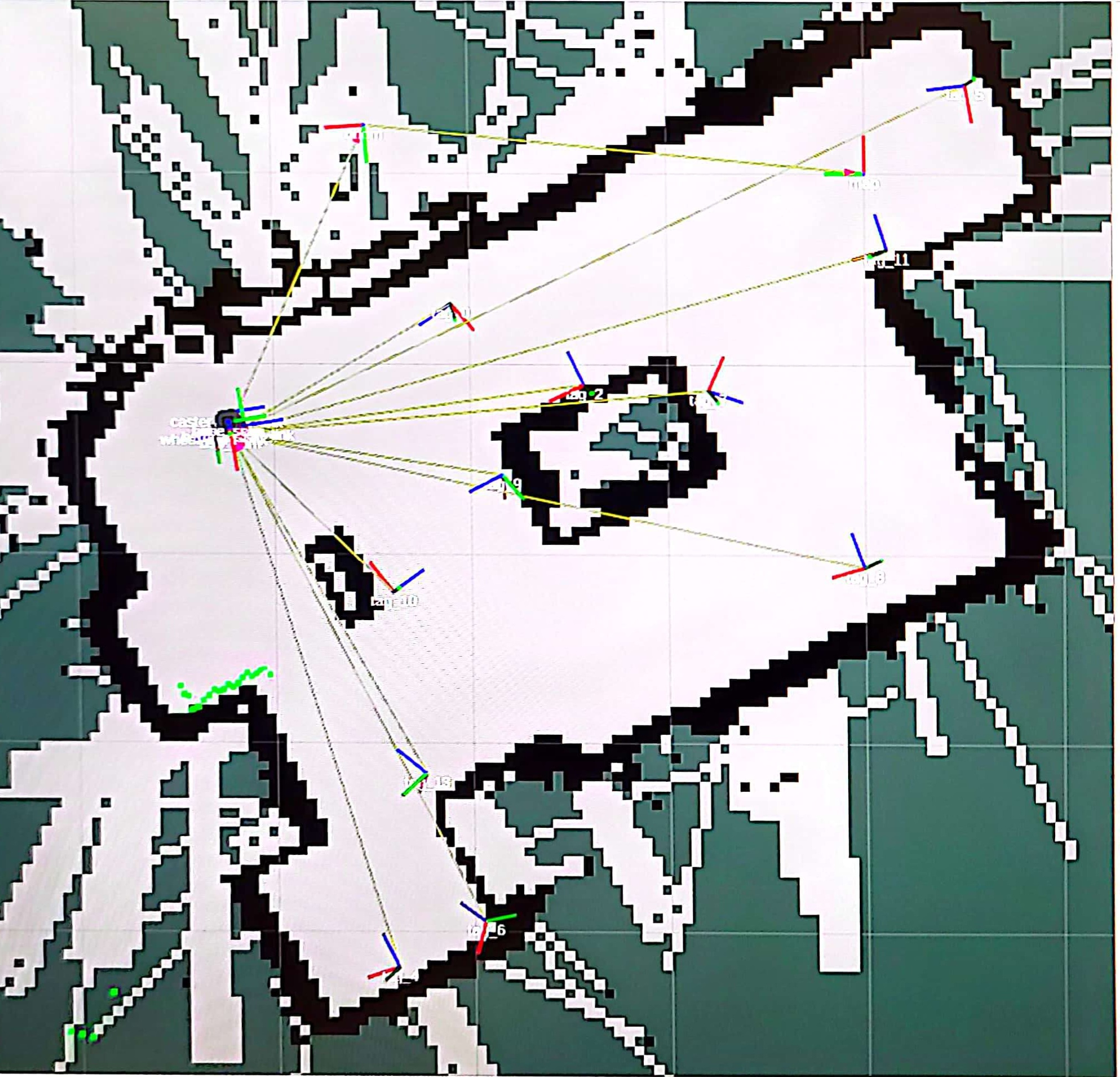

In the second phase, the robot concentrated on locating the tags and reporting their poses on the map shown in Fig. 6. Using the map, the robot scanned every wall of the testbed. Since the camera was mounted on the right side of the robot, the walls, which appear as occupied cells in the map, could be scanned thoroughly by driving the robot along them in one consistent direction so that the camera always faced the wall. Whenever a tag appeared in the camera image, its pose was transformed to the map frame and recorded, as shown in Fig. 7 below.

Fig. 7: Poses of tags displayed on occupancy grid map.

Some of the tag poses did not lie exactly on the walls. This was caused by drift in the robot's estimated pose, which accumulated after the robot had been driving for a while.

Video 3: Running AprilTags detection on TurtleBot3.

Video 4: AprilTags detection displayed on Rviz.

Videos 3 and 4 show the second phase of the reconnaissance mission in the testbed and on Rviz. All of the tags in the testbed were detected and displayed on the map, so the robot successfully completed its mission.

References

[1] ROBOTIS e-Manual, “TurtleBot3 Specifications,” Available: https://emanual.robotis.com/docs/en/platform/turtlebot3/features/#specifications.

[2] ROS Wiki, “explore_lite,” Available: http://wiki.ros.org/explore_lite.

[3] ROS Wiki, “apriltag_ros,” Available: http://wiki.ros.org/apriltag_ros.