Projects

ToddlerBot 2.0: Open-Source ML-Compatible Humanoid Platform

Dec 2024 - Aug 2025

Robotics and Embodied Artificial Intelligence (REAL) Lab and The Movement Lab, Stanford University

- Contributed to the 2.0 release by improving the custom keyframe animation app and adding non-physics motion generation and RL training tools.

- Built an RL training pipeline with custom terrain generation, integrated stereo depth perception using the deep learning model FoundationStereo, and added RGB-D rendering and elevation mapping features in simulation.

Jan 2025 - Mar 2025

- Developed a lightweight CNN to predict image-specific scaling factors for Depth Anything V2, addressing scale ambiguity in monocular depth estimation.

- Reduced AbsRel error by 43% and doubled δ₁ accuracy on the NYU Depth V2 test set compared to the unscaled baseline.

Sep 2024 - Dec 2024

- Trained DQN (MDP) and DRQN (POMDP) agents in the SUMO simulator for autonomous intersection navigation among agents with unknown driving styles.

- Achieved 88% success rate; DRQN learned to infer latent driver behaviors using recurrent memory for safer decision-making.

Sep 2024 - Dec 2024

- Implemented a Mini-UNet affordance model for robotic grasping, achieving 86.7% success on seen and 76.7% on unseen objects.

- Added a test-time improvement that prevents repeated failed grasps by dynamically updating affordance maps.

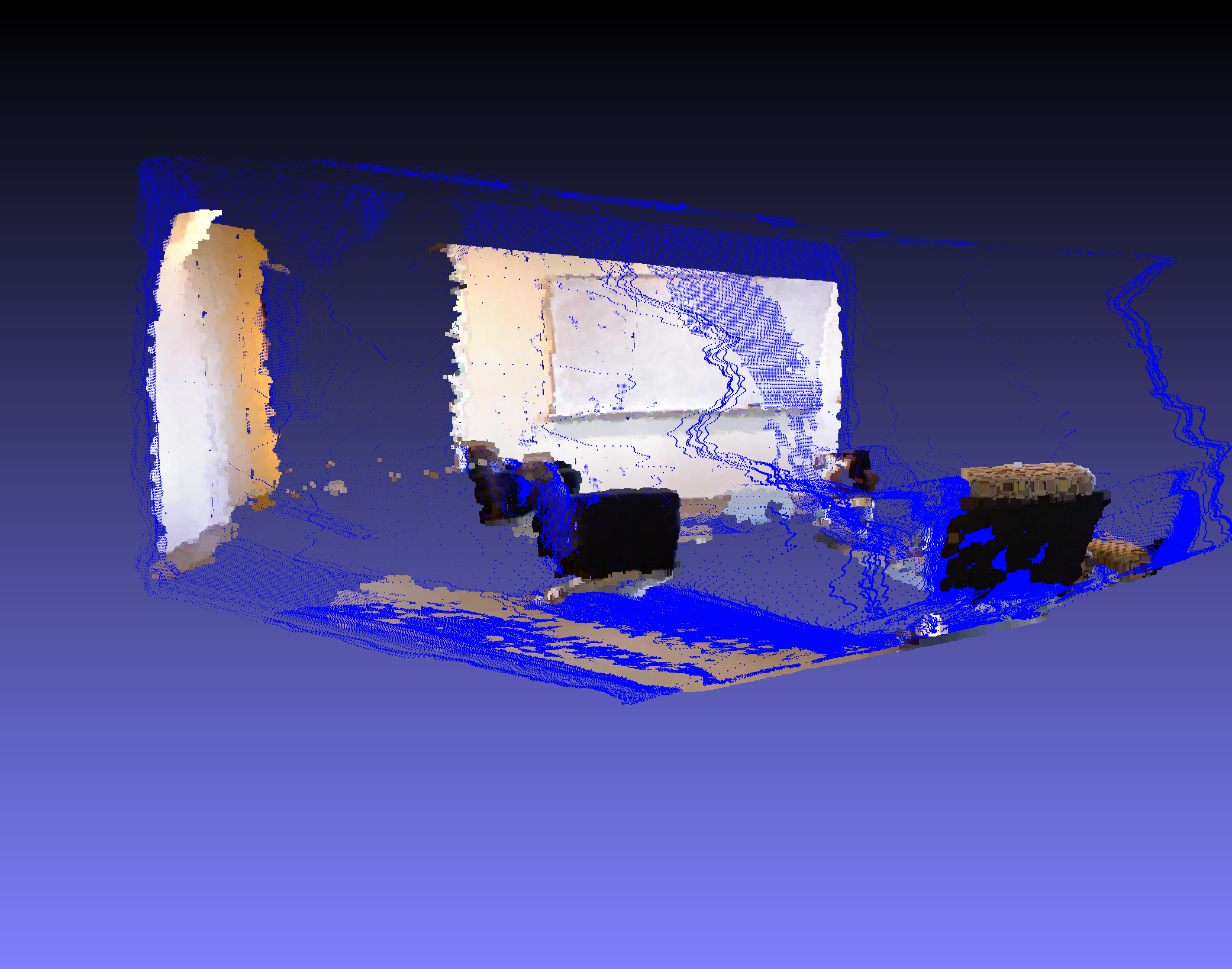

Go1 DLIOM-Based Autonomous Navigation

Dec 2023 - Aug 2024

Autonomy & Intelligence Lab, Northeastern University

- Configured a Unitree Go1 robot dog with a 3D LiDAR sensor fixture and a ROS2 environment on the robot's Jetson board.

- Integrated state estimation from DLIOM (Direct LiDAR Inertial Odometry and Mapping) with ROS2 Nav2.

- Achieved autonomous navigation with custom nodes that convert Nav2 velocity commands into the robot's high-level SDK commands.

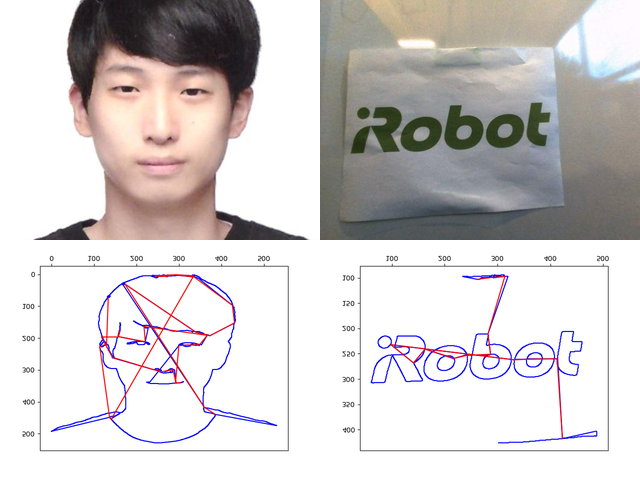

Jul 2023 - Aug 2023

- Created a program that converted input images to robot drawing trajectories.

- Supported images uploaded by users or captured with the robot's fisheye camera.